How To Use Go Interfaces

I occasionally give free Go consults and code review on top of my daily work. As such, I tend to read a lot of other people’s code. And while this is really more of a feeling (Now, you should go, really? You're a statistician by training ffs), I’ve seen an increase in what I call “Java-style” interface usage.

This blog post is a Go-specific recommendation from me, based on my experiences writing Go code, on how to use interfaces well.

For this blog post, the running example will span two packages: animal and circus. A lot of what I write about here is about code at the boundary of packages.

Tuples Are Powerful

Over Chinese New Year celebrations, a friend asked (again) about the curious lack of a particular feature in Gorgonia, the deep-learning package for Go: tuples, which led to this tweet (that no one else found funny :( )

"I follow the teachings of Lambda Calculus turing times of hardship"

— Chewxy (@chewxy) February 17, 2018

and

"I'm a follower of Church of Turing Machines"

are puns that should be considered the epitome of puns.

I may be a bit intoxicated. Just a bit.

The feature that was missing is one that I’ve vehemently objected to in the past. I objected to it so vehemently that by the first public release of Gorgonia, there was only one reference that it had ever existed (by the time I released Gorgonia to the public, I had worked on 3 versions of the same idea).

[Read More]

Term Rewriting Chinese Relatives

Learn Chinese AND Functional Programming At the Same Time

I recently attended QFPL’s excellent Haskell course. Tony Morris was a little DRY (it's a joke: Tony kept mentioning Don't Repeat Yourself and being lazy) but nonetheless was an excellent presenter. (The course shook my confidence in my existing ability to reason in Haskell for a bit, but it was for the better - I had some fundamentals that were broken and Tony explained some things in a way that fixed them... for now - I have no doubt some basics will be lost to the ether in the next few months.) So for the rest of the week I was in a bit of an equational-reasoning mode.

Then my dad sent me a cute link to a calculator that calculates vocatives for Chinese relatives. Given that English is my first language (hence Chinese is not my default mode of thinking), this set me off on a chain of thoughts about languages and symbols (you’d find a high correlation between my switching modes of thinking and blog posts - the last time this happened, I wrote about yes and no).

One of the difficult things that many people report with programming languages is the decoupling of syntax and semantics. I’ve often wondered if we might be better off with a syntax that is based on symbols (rather like APL) - the initial hurdle might be higher, but once that’s done, syntax and semantics are completely decoupled. Then we’d not have flame wars on syntax, but rather a more interesting flame war on semantics and pragmatics.

Another line of thinking I had was the hypothetical development of computing and logics in a parallel universe where Chinese was the dominant linguistic paradigm - it’s one that I’ve had since I visited China for the first time.

Combined, these trains of thought led to this blog post. So let’s learn some Chinese while learning some (really restricted) functional programming! Bear in mind it’s a very rough, unrigorous version.

[Read More]

Go For Data Science

This may come as a surprise for many people, but I do a large portion of my data science work in Go. I recently gave a talk on why I use Go for data science. The slides are here, and I’d also like to expand on a few more things after the jump:

[Read More]

Data Empathy, Data Sympathy

Today’s blog post will be a little on the light side as I explore the various things that come up in my experience working as a data scientist.

I’d like to consider myself to have a fairly solid understanding of statistics (I would think it's accurate to say that I may be slightly above average in statistical understanding compared to the rest of the population). A very large part of my work can be classified as stakeholder management - and this means interacting with other people who may not have as strong a statistical foundation as I have. I’m not very good at it, in the sense that people often think I am hostile when in fact all I am doing is questioning assumptions (I get the feeling people don't like it, but you can't get around the questioning of assumptions).

Since the early days of my work, there’s been a feeling that I’ve not been able to put into words when dealing with stakeholders. I think I finally have the words to express said feeling. Specifically, it was the transference of tacit knowledge that bugged me quite a bit.

Consider an example where the stakeholder is someone who has been in the field for quite some time. They don’t necessarily have the statistical know-how when it comes to dealing with data, much less the rigour that comes with statistical thinking. More often than not, decisions are driven by gut-feel based on what the data tells them. I call these sorts of processes data-inspired (as opposed to data-driven decision making).

These gut feelings about data can be right or wrong. The stakeholders learn from them either way, and what they learn becomes knowledge from experience, or what economists call tacit knowledge.

The bulk of the work is of course transitioning an organization from being data-inspired to becoming actually data-driven.

[Read More]

Sapir-Whorf on Programming Languages

Or: How I Got Blindsided By Syntax

Tensor Refactor: A Go Experience Report

May Contain Some Thoughts on Generics in Go

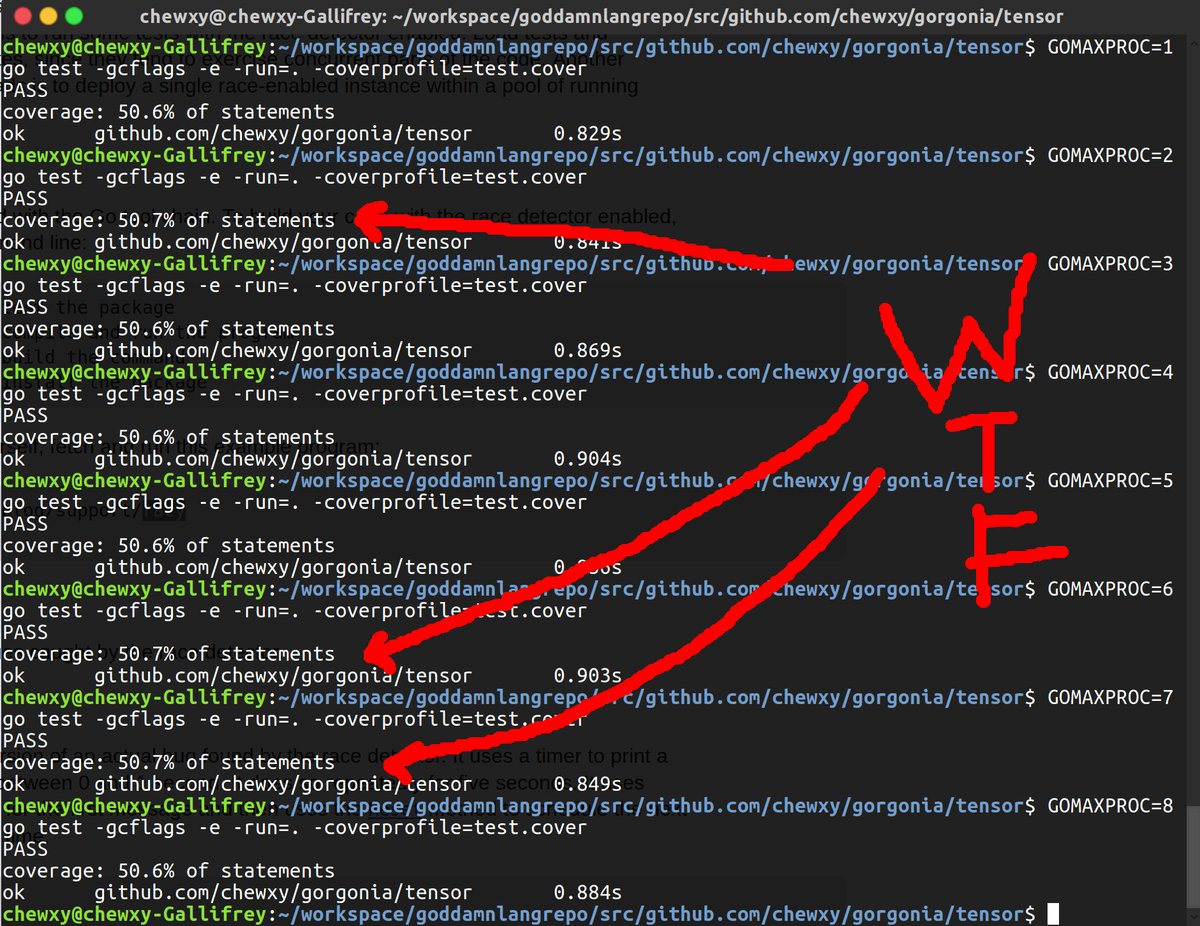

There have been major refactors done to the tensor subpackage in Gorgonia - a Go library for deep learning purposes (think of it as TensorFlow or PyTorch for Golang). It’s part of a list of fairly major changes to the library as it matures. While I’ve used it for a number of production-ready projects, an informal survey found that the library was still a little difficult to use (plus, it’s not used by any famous papers, so people are generally more resistant to learning it than, say, TensorFlow or PyTorch).

Along the way in the process of refactoring this library, there was plenty of hairy stuff (like creating channels of negative length), and I learned a lot more about building generic data structures than I needed to.

[Read More]

Garbage Collection is Also a Side Effect

21 Bits Ought to Be Enough for (Everyday) English

Or, Bitpacking Shenanigans

I was doing some additional work on lingo and preparing it to be moved to go-nlp. One of the things I was improving is the corpus package. The goal of package corpus is to provide a map of word to ID and vice versa. Along the way, package lingo also exposes a Corpus interface, as there may be other data structures that showcase corpus-like behaviour (things like word embeddings come to mind).

When optimizing and possibly refactoring the package(s), I like to take stock of all the things the corpus package and the Corpus interface are useful for, as this would clearly affect some of the type signatures. This practice usually involves me reaching back into the annals of history, looking at the programs and utilities I had written, and consolidating them.
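To make the "map of word to ID and vice versa" idea concrete, here is a minimal sketch of what such a structure might look like. It is not the actual lingo or corpus API: the interface shape, the `mapCorpus` type, and the method names `Add`, `ID` and `Word` are all assumptions made up for illustration.

```go
package main

import "fmt"

// Corpus is a hypothetical interface (not lingo's real one) for anything
// that can translate between words and integer IDs.
type Corpus interface {
	ID(word string) (int, bool)
	Word(id int) (string, bool)
}

// mapCorpus is an illustrative in-memory implementation: a slice gives
// ID -> word, a map gives word -> ID.
type mapCorpus struct {
	words []string
	ids   map[string]int
}

func newMapCorpus() *mapCorpus {
	return &mapCorpus{ids: make(map[string]int)}
}

// Add registers a word if it has not been seen and returns its ID.
// IDs are assigned in insertion order, starting from 0.
func (c *mapCorpus) Add(word string) int {
	if id, ok := c.ids[word]; ok {
		return id
	}
	id := len(c.words)
	c.words = append(c.words, word)
	c.ids[word] = id
	return id
}

// ID looks up the ID of a word; the bool reports whether it is known.
func (c *mapCorpus) ID(word string) (int, bool) {
	id, ok := c.ids[word]
	return id, ok
}

// Word looks up the word for an ID; the bool reports whether it is in range.
func (c *mapCorpus) Word(id int) (string, bool) {
	if id < 0 || id >= len(c.words) {
		return "", false
	}
	return c.words[id], true
}

func main() {
	c := newMapCorpus()
	for _, w := range []string{"the", "quick", "the"} {
		fmt.Println(w, c.Add(w))
	}
	var _ Corpus = c // mapCorpus satisfies the hypothetical interface
}
```

Anything else with corpus-like behaviour (a word-embedding table, say) could satisfy the same two-method interface without sharing this implementation.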

One of the things that I would eventually have a use for again is n-grams. The current n-gram data structure that I have in my projects is not very nice and I wish to change that.

[Read More]