I had a very interesting chat with a few data science students yesterday. Part of the chat involved the idea of statistical modeling. Throughout the chat, it occured to me that the students didn’t have a very good grasp of what modeling is. To their credit, they were proficient in the techniques of linear regression, and deep learning, but I got the sense that they were very much pushing buttons and watching things happen rather than understanding what they were actually doing. There was no sense of a big picture view.

This has been happening quite a lot lately. I find it somewhat alarming. This blog post is a semi-transcript of what I said last night. It aims to be as simple as possible.

What is a Model?

A model is a representation of reality. It’s what we think reality looks like.

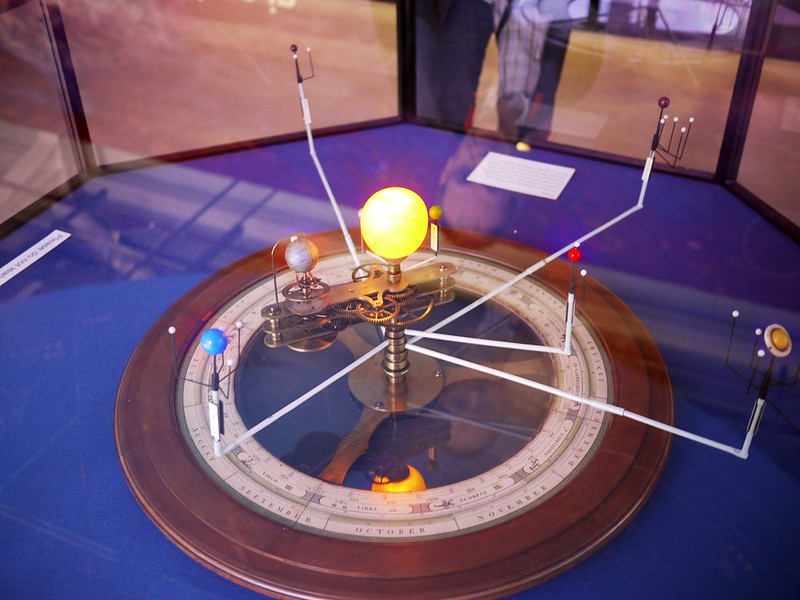

For example, we live on Planet Earth, in a solar system. We can build models of our Solar System. Here’s an example of a model of our Solar System.

An Orrery of our Solar System. Photograph by Smabs Sputzer, published with a CC BY 2.0 licence. Source: https://www.flickr.com/photos/10413717@N08/7527137708

As a child, such models of our solar system endlessly fascinate. I would spend hours thinking about how the planets moved. I would play and watch the planets spin around its spindles. I understood that there was a force called gravity that caused the planets to orbit the sun. As I grew older, the physical model of our Solar System is gradually replaced by three laws.

There are parts of the model we can study:

- What is the shape of the orbits

- How the planets move

- Why do planets move thus

These are sub-models of the model of our Solar System. The shape of the orbit is given by Kepler’s First Law. How planets move is given by Kepler’s Second and Third Law. Why do planets move thus is given by Newtonian mechanics.

Each of Kepler’s laws have an equation governing them. So we can say the equations model our Solar System. The equations are the model of our Solar System. These equations describe something static (the shape of the orbit) and represent something dynamic (how the planets move). What used to be physical motion in a physical model can now be written down on a piece of paper, an equation representing the real thing.

How Do You Make Models?

There are many ways of making a model. Sometimes it’s useful to have a physical understanding of something. I built the 2019-SARS-CoV-2 virion to help me have a better understanding of the coronavirus that caused the pandemic in 2020. Now, I’m no biologist, so my model is crude. My model is extremely physical. Despite this, it gave me an understanding of how a mRNA vaccine might work. It gave me confidence over what actual proper scientists are doing.

So making a physical model is one way. But what if we want something more rigorous? The usual way is to resort to some sort of formalism. Various fields have various formalisms. For example, in chemistry, you use chemical equations. However, the most common formalism would be a mathematical equation. Maths equations are used in physics, economics, biology, and many other fields.

So how do you create an equation that becomes a model of something? There are two ways:

- Generate an equation.

- Find an equation from data.

In the large scheme of things, both of these amount to the same thing: generating an equation. I’ll talk about that in a later section. For now, when I say “model generation” I mean generating an equation that models reality.

How Do You Generate An Equation?

The simplest way of generating an equation is to randomly generate one by writing down symbols on a piece of paper.

That’s daft, you say. You’d be hard pressed to find a equation that adequately describes the situation!

That’s why most model generation comes from first principles. In using the Solar System example, if we accept Newton’s law of universal graviatation ($F = G{\frac {Mm}{r^{2}}}$), then we can work our way to find Kepler’s third law. Kepler’s other laws require other first principles such as trigonometry.

The key is that you understand a subject well enough that you may generate further models about the subject using your basic understanding.

However, random generation has its place. In fact, from here on, whenever I write “generate a model”, you may think of a person randomly coming up with math equations.

How Do You Find An Equation From Data?

There may be cases where first principles may not be used. This is often the case in new fields.

So the next best way is to find an equation from data. There are many ways to do them. Regression analysis is one such way of finding an equation from data. Let’s look at a simple example of linear regression with one variable.

The fundamental idea of a linear regression is that you plot your data points, and draw a straight line through the plot (line A). Each data point would be some distance away from line. Sum those distances up and square them. Call it the “error”. Now draw another line through the plot (line B) and find the errors of B. Keep doing until you find a line that has the lowest amount of errors.

This is the line-of-best-fit. Given that all straight lines on a plot can be described by an equation that looks like $y = mx +C$, the equation that describes the line of best fit (e.g. $y=2x + 1$) is the model.

This idea of model building extends all the way to deep neural networks. They key being the model is built by looking at the relationships between the variables that make up a data point.

How Good Is Your Model?

The whole point of creating a model is to reflect reality. I could well come up with a model of gravity that says this: all objects exert a force on each other that is quadratic on the distance between them - written as $F = d(a, b)^2$. But does this reflect reality? No.

How do I know this? I know this because I can test it. I can collect data, and then check if the data fits my model. In the silly example above, it’s trivial to check with a counterpoint: I am able push something off my desk. If the force is solely based on distance, then as my hand approaches the object, the force should get smaller and smaller to the point that I am unable to affect the object.

In many courses about regression analyses, the R² values are often taught to students as a measure of how good one’s model isI find this to be mostly true about "data science" courses/bootcamps, but not more traditional uni level course on regression/economics/statistics. It’s not! A R² value is how good the fit of data to the line is. In some sense you may think of this as the inverse of “how good is your model” . It’s more “how much data fits in your model”. Indeed, the R² value is indicative of how much variance of your data is covered by the model.

This is not to say that the courses are wrong. The statement that “R² tells you how good your model is” is a very subtle statement. Let’s unpack them. Let’s say you found a line of best fit that is described by the formula $y = 2x + 15$. This is the model that we have “generated”. Now we want to see how much of reality (our dataset) is described by the model. It is in this sense that R² represents the notion of how “good” a model is.

Now it seems a bit weird, given that we used the dataset to find the line of best fit, and then we turn around and say we generated a model, not let’s test to see how good it is. There seems to be a bit of circular logic to it. However there are a lot of theoretical work on why the line of best fit found by a ordinary least squares (OLS) regression is a good model “generator” - the wikipedia article on OLS covers quite a bit, as does the Gauss-Markov theorem article. Most textbooks also lay out proofs of why OLS estimators are BLUE (Best Linear Unbiased Estimator).

Here I want to point out that “reality” is itself just a sample. The dataset that you use to generate a model is just a sample. This is where conscious sampling of data is important. Let’s imagine we are training a machine learning system to recognize faces. My social circle are White or Asian. So if I ask my social circle to send me some photographs of their faces to train a machine learning system, then the machine learning system would not be able to recognize faces that are not White or Asian! Clearly this is not a good representation of reality.

This trivial example only scratches the surface of equitable conscious collection of sample “reality” for the sake of model building. This topic is a very deep topic and it’ll take many blog posts to talk about it. So I shall leave it be for now.

In more advanced machine learning modeling systems (e.g: deep learning systems), it is common to split the dataset into “training” and “testing” datasets. The model is trained on the training dataset and tested on the testing dataset. This is to ensure that the model does not only model “reality” that is in the training dataset, but can also generalize to previously unseen data.

What I am trying to convey here is that sanity checks against reality is a good thing. We should do them more often.

All Models are Wrong

Having said that, we have to accept that models are just that - models. They are not reality. George Box had a good saying:

All models are wrong. But some are useful.

The key is to find a model that is useful enough for what you need to do. Let the natural philosophers worry about the most accurate models of reality.

Conclusion

Humanity is always generating models. Individually, in our brains, we generate internal models that are corrected every second of the day. Consider catching a ball. Your brain generates a model of physical reality - no equations here - telling us where the ball is going to be. As the ball arcs through the air, we update the models in our brain, getting better and better predictions, resulting in us catching the ball. Or in my case, the ball lands on my face.

Communally, we generate models too. The invention of writing and speech allowed us to share models with other individuals. We started using things like maths equations to make our meanings clear. Our models get refined over generations. Take gravity for example. For centuries, our best model of gravity is Newton’s law of universal gravitation. Then along came Einstein and changed the way we describe gravity. Both these models of gravity are correct in the sense that they adequately describe the phenomenon of gravity. The resolution at which they describe gravity is what makes the models different.

I hope I have given you a big picture view of what modeling is. This post isn’t terribly rigorous because it aims to give that big picture. There have been many things elided for the sake of an abstract view. If you need further explanations I will be more than happy to explain them.