This post was originally a series of tweets I wrote on a dream I had last night. For posterity I’ll be re-describing the dream here. Words might be slightly different from the tweets given the lack of character restrictions.

I had a dream last night. It was a weird dream. I dreamt that all human languages could be shrunk down into a CBNF (C is for Contextual, or Chewxy). (There aren't such things as CBNFs. It was a pure figment of imagination.) In the dream, the CBNF for English was about 200KB, while the CBNF of Chinese was about 190KB. More importantly, the CBNF for a language describing all languages - a meta language - was only about 10KB.

The dream made me feel weird. It was a kinda uneasy yet taken-for-granted feeling. If all of human language can be compressed to 10KB (which is quite a lot!), then given a functioning recursive generator, what does it mean? It felt limiting yet limitless.

I would imagine this was what Cantor felt like when he first discovered that there are many infinities.

Anyway, the rest of this blog post will be dedicated to breaking down the dream. As such the content of this post will feel a lot more scattered - there isn’t really a main takeaway point.

Quick Primer: BNFs

BNF stands for Backus-Naur Form. A BNF is a notation used to describe the rules of a language (a.k.a. the grammar). You can use a BNF to generate all the possible words and sentences of a language. I’ve used it several times on this blog. Here are three examples of a BNF:

Example 1: Pure λ-Calculus

$$ \begin{aligned} T, U &::= x\ |\ \lambda x.T\ |\ (T\ U) \end{aligned} $$

This is the usual BNF for the pure λ-calculus. It’s also one of the simplest to understand. It says $T$ and $U$ are (meta)variables that can each be either $x$ (a variable), or $\lambda x.T$ (an abstraction) or $(T\ U)$ (an application of two terms).

$T$ and $U$ are called terms. Anything generated from a BNF is called a term. A term may be comprised of multiple terms, as seen in the rule $\lambda x.T$ and $(T\ U)$.

You may notice that the definition is recursive - $T$ is defined by rules that use $T$ (i.e. the rules $\lambda x.T$ and $(T\ U)$). This notation may be a bit confusing. Alas, such is the nature of using metavariables.

The main reason for using two (meta)variables is to make it clear that the two sub-terms in $(T\ U)$ may be different terms.

With this BNF we can generate a whole programming language. The random generation process is recursive, with an (admittedly crappy) algorithm as follows:

    Function Gen: () → Term
        Use a random number generator to pick a rule.
        Switch on which rule was picked:
            Case x: GenerateVar
            Case λx.T: GenerateAbs
            Case (T U): GenerateApp

    Function GenerateVar: () → Var
        Pick a previously unused variable and return it.

    Function GenerateAbs: () → Abs
        A ← Gen()
        Return λx.A

    Function GenerateApp: () → App
        X ← Gen()
        Y ← Gen()
        Return (X Y)

In Haskell:

    import Control.Monad.IO.Class (MonadIO, liftIO)
    import System.Random

    -- Terms of the pure λ-calculus: variables, abstractions, applications.
    data L = Var Char | Abs Char L | App L L

    instance Show L where
      show (Var x)   = [x]
      show (Abs x t) = "λ" ++ [x] ++ "." ++ show t
      show (App t u) = "(" ++ show t ++ " " ++ show u ++ ")"

    -- Draw a value from the given range using the global generator.
    -- liftIO keeps this working whether or not getStdGen is already MonadIO.
    rand :: (Random a, MonadIO m) => (a, a) -> m a
    rand x = liftIO $ do
      g <- getStdGen
      let (r, g2) = randomR x g
      setStdGen g2
      return r

    genChar :: MonadIO m => m Char
    genChar = rand ('a', 'z')

    genVar :: MonadIO m => m L
    genVar = Var <$> genChar

    genAbs :: MonadIO m => m L
    genAbs = do
      x <- genChar
      t <- gen
      return (Abs x t)

    genApp :: MonadIO m => m L
    genApp = do
      t <- gen
      u <- gen
      return (App t u)

    -- Pick one of the three rules uniformly at random.
    gen :: MonadIO m => m L
    gen = do
      r <- rand (0, 2 :: Int)
      case r of
        0 -> genVar
        1 -> genAbs
        _ -> genApp

    main :: IO ()
    main = gen >>= print

In Go:

    package main

    import (
        "fmt"
        "math/rand"
    )

    // L is a term of the pure λ-calculus.
    type L interface{ String() string }

    type Var rune
    type Abs struct {
        X Var
        T L
    }
    type App struct{ Fst, Snd L }

    func (x Var) String() string { return fmt.Sprintf("%c", rune(x)) }
    func (λ Abs) String() string { return fmt.Sprintf("λ%v.%v", λ.X, λ.T) }
    func (a App) String() string { return fmt.Sprintf("(%v %v)", a.Fst, a.Snd) }

    // genVar picks a random lowercase letter ('a' is 97).
    func genVar() Var { return Var(97 + rand.Intn(26)) }

    func genAbs() Abs {
        x := genVar()
        t := gen()
        return Abs{x, t}
    }

    func genApp() App {
        t, u := gen(), gen()
        return App{t, u}
    }

    // gen picks one of the three rules uniformly at random.
    // Before Go 1.20 the global math/rand source is deterministic unless seeded.
    func gen() L {
        switch rand.Intn(3) {
        case 0:
            return genVar()
        case 1:
            return genAbs()
        case 2:
            return genApp()
        }
        panic("Unreachable")
    }

    func main() { fmt.Println(gen()) }

Now, every generated term will be a valid term, but most are likely to be nonsensical programs. Here are a few examples - all of which are valid λ-calculus terms, but under the usual reduction rules, are completely nonsensical:

λf.λm.k

(l (λg.λa.λc.(λa.(λw.t (((u λx.λx.λq.d) f) (s f))) c) λo.λf.λr.λw.λp.d))

λa.(v k)

c

Example 2: Natural Numbers

$$ \begin{aligned} N &::= 0\ |\ \llbracket N+1 \rrbracket \end{aligned} $$

This is a definition of the natural numbers. The double brackets ($\llbracket \cdot \rrbracket$) indicate that it’s the computed result. So if $N$ is 0, then the next number generated is (0 + 1) = 1. An alternative formulation of this rule is usually written as $Succ\ N$. But since I will not be introducing any arithmetic, it’s fine to use $\llbracket N+1 \rrbracket$ as there will be no confusion of symbols.

While we can recursively generate numbers in the same way the terms of pure λ-calculus were generated in Example 1, we can also inductively generate new numbers, by starting at 0, then generating all the natural numbers in the world by recursively applying the $\llbracket N + 1 \rrbracket$ rule.

I use the word “inductively” to mean that you can generate new terms from an existing “base” case. In the example of natural numbers, the base case is 0.
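Here is a minimal Go sketch of that inductive process. The names `succ` and `naturals` are my own, made up for illustration: `succ` plays the role of the $\llbracket N+1 \rrbracket$ rule, and `naturals` starts from the base case 0 and keeps applying it.

```go
package main

import "fmt"

// succ is the [[N+1]] rule: given a natural number, compute the next one.
func succ(n uint) uint { return n + 1 }

// naturals returns the first count natural numbers, built inductively:
// start from the base case 0 and keep applying succ to the latest term.
func naturals(count int) []uint {
	var out []uint
	var n uint // base case: 0
	for i := 0; i < count; i++ {
		out = append(out, n)
		n = succ(n)
	}
	return out
}

func main() {
	fmt.Println(naturals(5)) // [0 1 2 3 4]
}
```

Unlike the random generator from Example 1, nothing here is left to chance: every term is derived from the one before it.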

Example 3: Pseudo-English

This is the definition of a sample English-like language. Like Example 1, we may recursively generate sentences. But note - once we have gotten a $NVM$ sentence, we may also inductively generate new sentences using that $SCT$ rule.

Here’s what I mean. Start by generating a sentence using the $NVM$ rule - “I eat food”. Now we can use the $SCT$ rule to generate new sentences inductively. The sequence goes as follows (parentheses inserted to show the process of generation):

- “I eat food”

- “(I eat food) and (I eat food)”

- “(I eat food and I eat food) or (I eat food and I eat food)”

- “(I eat food and I eat food or I eat food and I eat food) or (I eat food)”

- …
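The sequence above can be sketched in Go. This is only an illustration under my own assumptions: `conjoin` stands in for the sentence-joining rule (two sentences with a conjunction between them), and `grow` always joins the latest sentence with itself, which is just one of the many ways the rule could be applied.

```go
package main

import "fmt"

// conjoin builds a new sentence from two existing ones and a conjunction.
// It stands in for the sentence-joining rule; its exact shape is assumed.
func conjoin(s, conj, t string) string { return s + " " + conj + " " + t }

// grow starts from a base sentence and applies conjoin once per conjunction,
// each time joining the latest sentence with itself.
func grow(base string, conjs []string) []string {
	out := []string{base}
	for _, c := range conjs {
		prev := out[len(out)-1]
		out = append(out, conjoin(prev, c, prev))
	}
	return out
}

func main() {
	for _, s := range grow("I eat food", []string{"and", "or"}) {
		fmt.Println(s)
	}
}
```

Each extra conjunction roughly doubles the sentence length, and there is no point at which the process has to stop.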

This process can go on infinitely. Funnily enough, in linguistics this sort of behaviour is called recursion. While this makes sense - one is applying the rules recursively - I have construed this type of behaviour as inductive, in the sense that you start from a base case. The terminology du jour in linguistics for starting with a base case is Merge.

BNFs Are For Context-Free Languages

The one last thing I haven’t told you about BNF is that BNFs can only represent context-free languages.

For context-sensitive languages, context-sensitive grammars are used. These grammars are usually some form of string rewriting system. These are particularly interesting - so interesting that Stephen Wolfram wrote a whole book purporting that all of physics is built on such a system.

Human languages are usually considered to be beyond context-sensitive - they’re unrestricted. The grammars of unrestricted languages are equivalent to Turing machines (and to the lambda calculus, if you subscribe to the Church-Turing Thesis).

Wait, What?

OK, so the grammars of unrestricted languages are equivalent to lambda calculus. But we just described the pure λ-calculus using a BNF! (If you are confused about why I write "lambda calculus" and "λ-calculus" differently: "lambda calculus" refers to a class of calculi derived from the original, the pure λ-calculus. Think "a lambda calculus" vs "the λ-calculus". The pure λ-calculus is a lambda calculus.)

Here’s a particularly subtle point that took me years to internalize (I have never quite written it down, so it was actually nice to write this, even if it's just for myself): BNFs are grammars. Grammars generate sentences/expressions. A set of expressions is called a language.

Now let’s use English (an unrestricted language) as an example. We start with this statement:

There are no BNFs that can generate English. BNFs are context-free grammars. Hypothetically, only unrestricted grammars can generate English.

To make this clear, I’m going to replace “unrestricted grammar” with “set of lambda calculus terms”:

There are no BNFs that can generate English. BNFs are context-free grammars. Hypothetically, there is a set of lambda calculus terms that can generate English.

To make this a bit clearer, I’m going to replace “set of lambda calculus terms” with “Turing machine”:

There are no BNFs that can generate English. BNFs are context-free grammars. Hypothetically, there is a Turing machine that can generate English.

To make this even clearer, I’m going to replace “Turing machine” with “computer program”:

There are no BNFs that can generate English. BNFs are context-free grammars. Hypothetically, there is a computer program that can generate English.

A computer program = A Turing machine = A set of lambda calculus terms = An unrestricted grammar. (The equals sign here is a very rough and non-rigorous notion of equivalence. These things are not equal, and equivalence is still mostly a theory, some stronger than others.)

The subtle point is this:

A set of lambda calculus terms ≠ BNF of a lambda calculus.

A Machine By Any Other Name

A simple BNF can generate an infinite number of terms. Let’s say one of these terms represents a Turing Machine that is capable of generating one natural language. How big would this Turing Machine be? This question is quite ill-posed as it’s quite difficult to describe TMs using BNFs. But we may ask equivalently: how big would a lambda calculus term (think computer program) be such that it may generate one natural language?

I would posit that it’s not going to be very large. In my dream it’d be about 200KB, compressed. It can be that small because the machinery required to generate a language isn’t included. It’s just a blueprint.

On Compression and Machines

Let’s say I want to store a string - say “FFFFFFFUUUUUUUUUUUU”. That’s at least 19 bytes required. I can write the compressed form - “F7U12”. That takes at least 5 bytes. But to get the full meaning of the string, I’d have to have machinery that transforms “F7U12” to “FFFFFFFUUUUUUUUUUUU”. That machinery will definitely be bigger than 19 bytes.
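To make the point about machinery concrete, here is a minimal run-length codec in Go. It is only a sketch: the function names `encode` and `decode` are mine, and it assumes the input contains no digits (otherwise the counts would be ambiguous).

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// encode run-length encodes s: each run of a repeated byte becomes the byte
// followed by the run length, e.g. "FFF" -> "F3". It assumes s has no digits.
func encode(s string) string {
	var b strings.Builder
	for i := 0; i < len(s); {
		j := i
		for j < len(s) && s[j] == s[i] {
			j++
		}
		b.WriteByte(s[i])
		b.WriteString(strconv.Itoa(j - i))
		i = j
	}
	return b.String()
}

// decode reverses encode: it reads a symbol, then the decimal count after it.
func decode(s string) (string, error) {
	var b strings.Builder
	for i := 0; i < len(s); {
		j := i + 1
		for j < len(s) && s[j] >= '0' && s[j] <= '9' {
			j++
		}
		n, err := strconv.Atoi(s[i+1 : j])
		if err != nil {
			return "", fmt.Errorf("missing count after %q: %w", s[i], err)
		}
		b.WriteString(strings.Repeat(s[i:i+1], n))
		i = j
	}
	return b.String(), nil
}

func main() {
	packed := encode("FFFFFFFUUUUUUUUUUUU")
	unpacked, _ := decode(packed)
	fmt.Println(packed, len(packed))     // F7U12 5
	fmt.Println(unpacked, len(unpacked)) // FFFFFFFUUUUUUUUUUUU 19
}
```

The source of `decode` alone is many times longer than the 19 bytes it recovers, before even counting the compiler and runtime it needs.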

This leads to a more interesting question: is it possible to build a decompression machine that is smaller than the compressed form?

And with the straying into Kolmogorov complexity, I think I should end this section.

From Infinites Comes Finities

I don’t think a natural language would ever have such a small BNF. But what if? It’s a weird feeling to have.

Consider a BNF that generates expressions involving natural numbers. It would generate things like 1 + (-1). There would be an infinite number of expressions. Now let’s say that this BNF is carefully written (perhaps in some sort of dependently typed language) such that the expressions generated would always evaluate to 0, 1 or 2. (There's a category theory joke in there somewhere. Probably involving a null object, initials and duals.)

If that’s not weird enough, consider the following: What if you have a BNF, A, that generates terms which, when evaluated (recalling that evaluation requires a machine), generate BNFs B that do what A does? Now B is a set. But what if the size of the set B is exactly 1 and the element is exactly A? What if it’s not A?
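One way to get a feel for this is a generator that is indexed by the value it must produce. The Go sketch below is my own construction, not a real dependently typed grammar: `genFor` only ever splits a target into two parts that sum back to it, so every expression evaluates to its target by construction. The names, the split range and the depth limit are all assumptions made for the sketch.

```go
package main

import (
	"fmt"
	"math/rand"
)

// Expr is an integer expression: a literal or the sum of two sub-expressions.
type Expr interface {
	Eval() int
	String() string
}

type Lit int
type Add struct{ Left, Right Expr }

func (n Lit) Eval() int { return int(n) }
func (a Add) Eval() int { return a.Left.Eval() + a.Right.Eval() }

func (n Lit) String() string {
	if n < 0 {
		return fmt.Sprintf("(%d)", int(n))
	}
	return fmt.Sprintf("%d", int(n))
}
func (a Add) String() string { return fmt.Sprintf("(%v + %v)", a.Left, a.Right) }

// genFor builds an expression that evaluates to target. Either it emits the
// literal itself, or it splits target into left + (target - left) and recurses.
func genFor(r *rand.Rand, target, depth int) Expr {
	if depth == 0 || r.Intn(2) == 0 {
		return Lit(target)
	}
	left := r.Intn(7) - 3 // arbitrary split point in [-3, 3]
	return Add{genFor(r, left, depth-1), genFor(r, target-left, depth-1)}
}

// gen picks a target in {0, 1, 2}, then generates an expression for it.
// seed and maxDepth are knobs for this sketch only.
func gen(seed int64, maxDepth int) Expr {
	r := rand.New(rand.NewSource(seed))
	return genFor(r, r.Intn(3), maxDepth)
}

func main() {
	for seed := int64(0); seed < 5; seed++ {
		e := gen(seed, 4)
		fmt.Printf("%v = %d\n", e, e.Eval())
	}
}
```

Remove the depth limit and the set of expressions is infinite, yet the set of values they evaluate to never grows beyond three.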

Indescribable Feeling

Allow me to ask the question: what is the relationship between a machine and its language? There is a sense of incompleteness or paradox - that the answer is either unknowable or something that would make one feel big and small at the same time. You would always need some sort of “meta” to encompass the current system. And I start having issues if I am in deeper than 4 or 5 layers of “meta”.

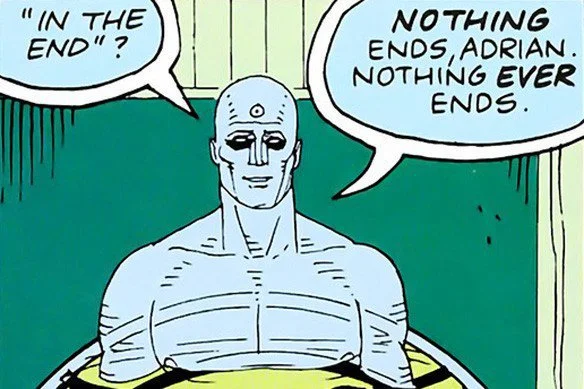

That I use the words “incompleteness” and “paradox” is no accident. Chaitin’s incompleteness theorem and Berry’s paradox were exactly what I was referring to: the feeling that infinities can be generated from finities, and that finities can be generated from infinities. Except in dreamland, it felt a lot more visceral. It’s an indescribable feeling.

Oh, on that note, “indescribable feeling” itself is also one of those Berry’s paradox things …